Singular Value Decomposition

Previously we have seen that an $n\times n$ matrix $A$ with $n$ independent eigenvectors can be diagonalized as

$$A=S\Lambda S^{-1}$$

We have also seen that if the eigenvectors of matrix $A$ are orthogonal (and normalized to unit length), then $Q^{-1}=Q^T$ (discussed earlier), so

$$A=Q\Lambda Q^{T}$$

But generally eigenvectors are not orthogonal.

Singular Value Decomposition (SVD) is a factorization of any $m\times n$ matrix $A$ into

$$A=U\Sigma V^T$$

Say that we have an $m\times n$ matrix $A$ with $\text{Rank}(A)=r$.

$$A_{m\times n}=\begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}$$
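As a quick numerical illustration of the factorization $A=U\Sigma V^T$, here is a minimal sketch using NumPy's `np.linalg.svd`. The $2\times 3$ matrix is a hypothetical example chosen for illustration; it is not taken from these notes.

```python
import numpy as np

# Hypothetical 2x3 example matrix (m=2, n=3), chosen only for illustration.
A = np.array([[3.0, 2.0, 2.0],
              [2.0, 3.0, -2.0]])
m, n = A.shape

# full_matrices=True returns U (m x m), the singular values s, and V^T (n x n).
U, s, Vt = np.linalg.svd(A, full_matrices=True)

# Put the singular values on the diagonal of an m x n matrix Sigma.
Sigma = np.zeros((m, n))
Sigma[:len(s), :len(s)] = np.diag(s)

# Multiply the three factors back together.
A_rebuilt = U @ Sigma @ Vt
print("singular values:", s)
print("A == U Sigma V^T:", np.allclose(A, A_rebuilt))
```

NumPy returns the singular values as a 1-D array, which is why the sketch builds the $m\times n$ matrix $\Sigma$ by hand.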

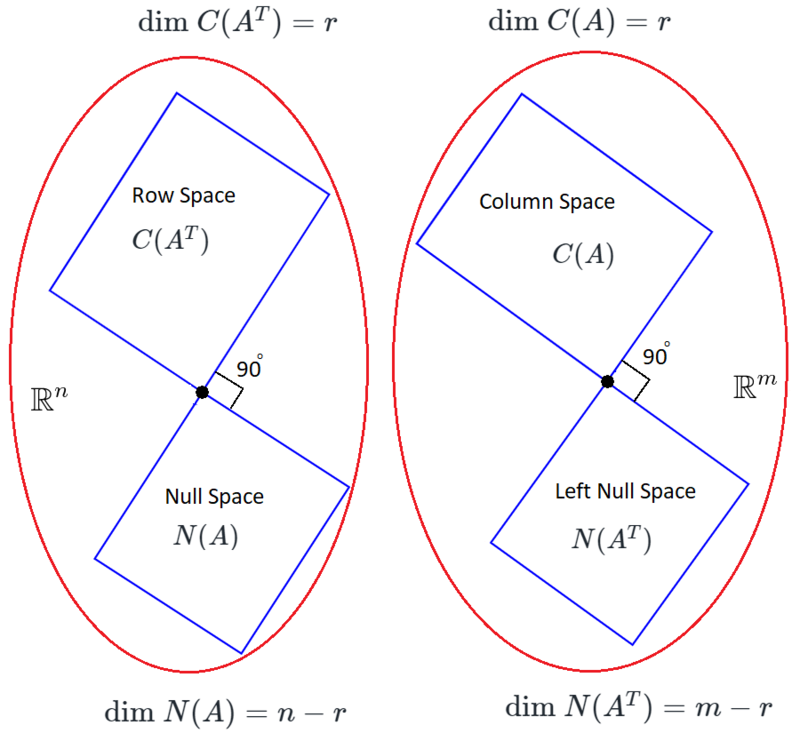

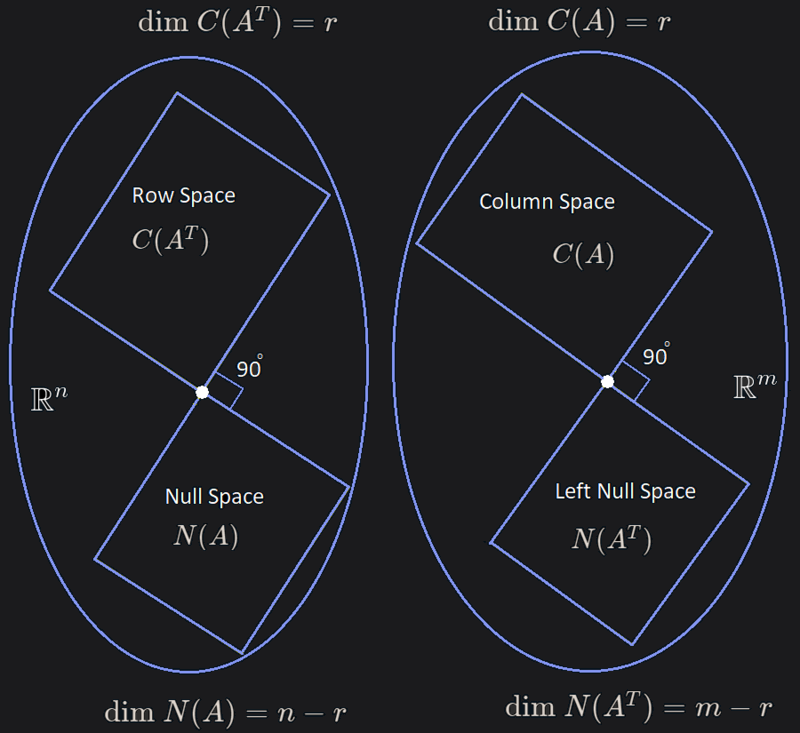

The row space is in $\mathbb{R}^n$: every row of $A$, written as a column vector, lives in $\mathbb{R}^n$.

$$\begin{bmatrix} a_{11} \\ a_{12} \\ \vdots \\ a_{1n} \end{bmatrix}\in\mathbb{R}^n, \begin{bmatrix} a_{21} \\ a_{22} \\ \vdots \\ a_{2n} \end{bmatrix}\in\mathbb{R}^n, \cdots, \begin{bmatrix} a_{m1} \\ a_{m2} \\ \vdots \\ a_{mn} \end{bmatrix}\in\mathbb{R}^n$$

The column space is in $\mathbb{R}^m$: every column of $A$ lives in $\mathbb{R}^m$.

$$\begin{bmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{m1} \end{bmatrix}\in\mathbb{R}^m, \begin{bmatrix} a_{12} \\ a_{22} \\ \vdots \\ a_{m2} \end{bmatrix}\in\mathbb{R}^m, \cdots, \begin{bmatrix} a_{1n} \\ a_{2n} \\ \vdots \\ a_{mn} \end{bmatrix}\in\mathbb{R}^m$$

A vector (say) $\vec{v}\in\mathbb{R}^n$ has some component in the Row Space and some in the Null Space.

When we transform that vector $\vec{v}\in\mathbb{R}^n$ using $A$ we get $A\vec{v}=\vec{u}\in\mathbb{R}^m$, which lies in the Column Space, because the Null Space component of $\vec{v}$ is sent to $\vec{0}$. A general vector $\vec{u}\in\mathbb{R}^m$ has some component in the Column Space and some in the Left Null Space, $\vec{u}=\vec{u}_p+\vec{u}_n$ [Reference].
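The split of a vector in $\mathbb{R}^n$ into a Row Space part and a Null Space part can be checked numerically. The sketch below is only an illustration: the rank-1 matrix and the vector are hypothetical, and the SVD routine is used purely as a convenient tool to obtain an orthonormal basis of the Row Space.

```python
import numpy as np

# Hypothetical rank-1 matrix: the second row is twice the first.
A = np.array([[1.0, 2.0, 3.0],
              [2.0, 4.0, 6.0]])
v = np.array([1.0, 0.0, 0.0])

# Orthonormal basis of the row space: rows of V^T with nonzero singular value.
U, s, Vt = np.linalg.svd(A)
r = int(np.sum(s > 1e-10))
V_r = Vt[:r].T

# Project v onto the row space; the remainder lies in the null space.
v_row = V_r @ (V_r.T @ v)
v_null = v - v_row

print("A v_null == 0:", np.allclose(A @ v_null, 0))
print("A v == A v_row:", np.allclose(A @ v, A @ v_row))
print("v_row . v_null == 0:", np.isclose(v_row @ v_null, 0))
```

Only the Row Space component survives the multiplication by $A$, which is why $A\vec{v}$ always lands in the Column Space.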

● Row Space $(C(A^T))$

Row vectors live in $n$-dimensional space, but $\text{Rank}(A)=r$, so they span only an $r$-dimensional subspace (the Row Space). To span this whole $r$-dimensional space we need $r$ basis vectors, and we choose orthonormal basis vectors.

(We can get them using the Gram-Schmidt method, but here we will use $A^TA$.)

Say the orthonormal basis vectors for the Row Space are $\vec{v}_1,\vec{v}_2,\cdots,\vec{v}_r$.

● Null Space $(N(A))$

The Null Space is perpendicular to the Row Space (orthogonal subspaces were discussed earlier), so every vector in the Null Space is perpendicular to every vector in the Row Space.

$\text{Rank}(A)=r$, so the Null Space is an $(n-r)$-dimensional subspace. To span this whole $(n-r)$-dimensional space we need $n-r$ basis vectors, and again we choose them orthonormal.

Say the orthonormal basis vectors for the Null Space are $\vec{v}_{r+1},\vec{v}_{r+2},\cdots,\vec{v}_n$.

● Column Space $(C(A))$ (Mapping from Row Space to Column Space)

After we have our orthonormal basis vectors for the Row Space, $\vec{v}_1,\vec{v}_2,\cdots,\vec{v}_r$, we map them to the Column Space using matrix $A$. Since $\text{Rank}(A)=r$, the Column Space is also $r$-dimensional.

$$A\vec{v}_i=\sigma_i\vec{u}_i;\quad\forall i\in\{1,2,\cdots,r\}$$

For $i\in\{1,2,\cdots,r\}$, $A$ maps $\vec{v}_i$ to $\sigma_i\vec{u}_i$, where $\vec{u}_i$ is a unit vector and $\sigma_i>0$ is the length of $A\vec{v}_i$. So the orthonormal basis vectors for our Column Space are $\vec{u}_1,\vec{u}_2,\cdots,\vec{u}_r$.

$$A\vec{v}_i=\vec{0};\quad\forall i\in\{r+1,r+2,\cdots,n\}$$

For $i\in\{r+1,r+2,\cdots,n\}$, $\vec{v}_i$ is in the Null Space of $A$, so the corresponding $\sigma_i\vec{u}_i$ is $\vec{0}$. For now we treat $\vec{u}_{r+1},\vec{u}_{r+2},\cdots,\vec{u}_n$ as $\vec{0}$ placeholders.

But wait, WHY are all the $\vec{u}_i$ orthonormal? We will see this when we use $A^TA$.
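A short derivation may help here. It is a sketch that assumes the $\vec{v}_i$ are orthonormal eigenvectors of $A^TA$ with $A^TA\vec{v}_j=\sigma_j^2\vec{v}_j$, a fact these notes establish further on, and it uses $\vec{u}_i=A\vec{v}_i/\sigma_i$ for $i,j\in\{1,\cdots,r\}$:

```latex
\vec{u}_i^T\vec{u}_j
  = \frac{(A\vec{v}_i)^T(A\vec{v}_j)}{\sigma_i\sigma_j}
  = \frac{\vec{v}_i^T\,(A^TA\,\vec{v}_j)}{\sigma_i\sigma_j}
  = \frac{\sigma_j^2\,\vec{v}_i^T\vec{v}_j}{\sigma_i\sigma_j}
  = \frac{\sigma_j}{\sigma_i}\,\vec{v}_i^T\vec{v}_j
  = \begin{cases} 1 & i=j \\ 0 & i\neq j \end{cases}
```

So orthonormality of the $\vec{v}_i$ carries over to the $\vec{u}_i$, provided the $\vec{v}_i$ come from $A^TA$ rather than from an arbitrary orthonormal basis of the Row Space.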

● Left Null Space $(N(A^T))$

The Left Null Space is perpendicular to the Column Space (orthogonal subspaces were discussed earlier), so every vector in the Left Null Space is perpendicular to every vector in the Column Space.

$\text{Rank}(A)=r$, so the Left Null Space is an $(m-r)$-dimensional subspace of $\mathbb{R}^m$. To span this whole $(m-r)$-dimensional space we need $m-r$ basis vectors. $A$ sends the Null Space to $\vec{0}$, so these vectors are not images of any $\vec{v}_i$; they are chosen to complete the orthonormal basis of $\mathbb{R}^m$.

Say the orthonormal basis vectors for the Left Null Space are $\vec{u}_{r+1},\vec{u}_{r+2},\cdots,\vec{u}_m$.
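The four orthonormal bases described above can be read off a numerical SVD. The sketch below assumes NumPy's `np.linalg.svd` and a hypothetical $3\times 4$ matrix of rank 2; the tolerance `tol` used to decide which singular values count as zero is also an assumption.

```python
import numpy as np

# Hypothetical 3x4 matrix of rank 2 (third row = first row + second row).
A = np.array([[1.0, 0.0, 1.0, 0.0],
              [0.0, 1.0, 0.0, 1.0],
              [1.0, 1.0, 1.0, 1.0]])
m, n = A.shape

U, s, Vt = np.linalg.svd(A, full_matrices=True)
V = Vt.T

tol = 1e-10                      # assumed threshold for "zero" singular values
r = int(np.sum(s > tol))         # numerical rank

row_basis = V[:, :r]             # v_1 .. v_r      span the Row Space
null_basis = V[:, r:]            # v_{r+1} .. v_n  span the Null Space
col_basis = U[:, :r]             # u_1 .. u_r      span the Column Space
left_null_basis = U[:, r:]       # u_{r+1} .. u_m  span the Left Null Space

print("rank:", r)
print("dims:", row_basis.shape[1], null_basis.shape[1],
      col_basis.shape[1], left_null_basis.shape[1])
print("A kills the null space:", np.allclose(A @ null_basis, 0))
print("A^T kills the left null space:", np.allclose(A.T @ left_null_basis, 0))
```

The printed dimensions should match $r$, $n-r$, $r$ and $m-r$ respectively.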

● Terminologies

$\vec{v}_1,\vec{v}_2,\cdots,\vec{v}_r$ are the orthonormal basis vectors of the Row Space $(C(A^T))$, and $\vec{v}_{r+1},\vec{v}_{r+2},\cdots,\vec{v}_n$ are the orthonormal basis vectors of the Null Space $(N(A))$.

$$\text{(say) } V'= \begin{bmatrix} \vdots & & \vdots & \vdots & & \vdots \\ \vec{v}_1 & \cdots & \vec{v}_r & \vec{v}_{r+1} & \cdots & \vec{v}_n \\ \vdots & & \vdots & \vdots & & \vdots \end{bmatrix}_{n\times n}$$

$$\text{(say) } U'= \begin{bmatrix} \vdots & & \vdots & \vdots & & \vdots \\ \vec{u}_1 & \cdots & \vec{u}_r & \underbrace{\vec{u}_{r+1}}_{=\vec{0}} & \cdots & \underbrace{\vec{u}_n}_{=\vec{0}} \\ \vdots & & \vdots & \vdots & & \vdots \end{bmatrix}_{m\times n}$$

$$\text{(say) } \Sigma'= \begin{bmatrix} \begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_r \end{bmatrix}_{r\times r} & \mathbf{0}_{r\times (n-r)} \\ \mathbf{0}_{(n-r)\times r} & \mathbf{0}_{(n-r)\times (n-r)} \end{bmatrix}_{n\times n}$$

Mapping from $\mathbb{R}^n$ to $\mathbb{R}^m$ (with $\sigma_i=0$ for $i>r$):

$$A\vec{v}_i=\sigma_i\vec{u}_i;\quad\forall i\in\{1,2,\cdots,n\}$$

We can write it as,

$$A\underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{v}_1 & \vec{v}_2 & \cdots & \vec{v}_n \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{V'_{n\times n}} = \underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{u}_1 & \vec{u}_2 & \cdots & \vec{u}_n \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{U'_{m\times n}} \underbrace{\begin{bmatrix} \begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_r \end{bmatrix}_{r\times r} & \mathbf{0}_{r\times (n-r)} \\ \mathbf{0}_{(n-r)\times r} & \mathbf{0}_{(n-r)\times (n-r)} \end{bmatrix}}_{\Sigma'_{n\times n}}$$

So,

$$AV'_{n\times n}=U'_{m\times n}\Sigma'_{n\times n}$$

Say that all rows of our matrix $A$ are independent $\Rightarrow \text{Rank}(A)=m$ (so $m\le n$).

Dimension of Row Space is: $m$

Dimension of Null Space is: $n-m$

Dimension of Column Space is: $m$

Dimension of Left Null Space is: $m-m=0$

$$A\underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{v}_1 & \vec{v}_2 & \cdots & \vec{v}_n \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{V'_{n\times n}} = \underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{u}_1 & \vec{u}_2 & \cdots & \vec{u}_n \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{U'_{m\times n}} \underbrace{\begin{bmatrix} \begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_m \end{bmatrix}_{m\times m} & \mathbf{0}_{m\times (n-m)} \\ \mathbf{0}_{(n-m)\times m} & \mathbf{0}_{(n-m)\times (n-m)} \end{bmatrix}}_{\Sigma'_{n\times n}}$$

So,

$$AV'_{n\times n}=U'_{m\times n}\Sigma'_{n\times n}$$

And as we discussed above, $\vec{u}_{r+1},\vec{u}_{r+2},\cdots,\vec{u}_n$ are $\vec{0}$ (here $r=m$), so we can drop these columns of $U'$ together with the last $n-m$ zero rows of $\Sigma'$:

$$A\underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{v}_1 & \vec{v}_2 & \cdots & \vec{v}_n \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{V_{n\times n}} = \underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{u}_1 & \vec{u}_2 & \cdots & \vec{u}_m \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{U_{m\times m}} \underbrace{\begin{bmatrix} \begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_m \end{bmatrix}_{m\times m} & \mathbf{0}_{m\times (n-m)} \end{bmatrix}}_{\Sigma_{m\times n}}$$

So,

$$A_{m\times n}V_{n\times n}=U_{m\times m}\Sigma_{m\times n}$$

This equation is what we call Full SVD.
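The column-by-column statement behind the Full SVD, $A\vec{v}_i=\sigma_i\vec{u}_i$ for $i\le m$ and $A\vec{v}_i=\vec{0}$ for $i>m$, can be checked directly. This is a minimal sketch with a hypothetical full-row-rank $2\times 3$ matrix, assuming NumPy's `np.linalg.svd` with `full_matrices=True`.

```python
import numpy as np

# Hypothetical 2x3 matrix with independent rows, so Rank(A) = m = 2.
A = np.array([[3.0, 2.0, 2.0],
              [2.0, 3.0, -2.0]])
m, n = A.shape

U, s, Vt = np.linalg.svd(A, full_matrices=True)
V = Vt.T

# Sigma is m x n: an m x m diagonal block followed by n - m zero columns.
Sigma = np.zeros((m, n))
Sigma[:m, :m] = np.diag(s)

# A v_i = sigma_i u_i for the first m columns of V.
maps_ok = all(np.allclose(A @ V[:, i], s[i] * U[:, i]) for i in range(m))
# A v_i = 0 for the remaining n - m columns (null space of A).
null_ok = all(np.allclose(A @ V[:, i], 0) for i in range(m, n))
# The same facts in matrix form: A V = U Sigma.
full_ok = np.allclose(A @ V, U @ Sigma)

print(maps_ok, null_ok, full_ok)
```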

In general, some of the rows or columns of our matrix $A$ may be dependent, so $\text{Rank}(A)=r$.

Dimension of Row Space is: $r$

Dimension of Null Space is: $n-r$

Dimension of Column Space is: $r$

Dimension of Left Null Space is: $m-r$

$$A\underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{v}_1 & \vec{v}_2 & \cdots & \vec{v}_n \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{V'_{n\times n}} = \underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{u}_1 & \vec{u}_2 & \cdots & \vec{u}_n \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{U'_{m\times n}} \underbrace{\begin{bmatrix} \begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_r \end{bmatrix}_{r\times r} & \mathbf{0}_{r\times (n-r)} \\ \mathbf{0}_{(n-r)\times r} & \mathbf{0}_{(n-r)\times (n-r)} \end{bmatrix}}_{\Sigma'_{n\times n}}$$

So,

$$AV'_{n\times n}=U'_{m\times n}\Sigma'_{n\times n}$$

And as we discussed above, $\vec{u}_{r+1},\vec{u}_{r+2},\cdots,\vec{u}_n$ are $\vec{0}$ and $\sigma_i=0$ for $i>r$, so we can keep only the first $r$ columns of $V'$ and $U'$ and the $r\times r$ block of $\Sigma'$:

$$A\underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{v}_1 & \vec{v}_2 & \cdots & \vec{v}_r \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{V_{n\times r}} = \underbrace{\begin{bmatrix} \vdots & \vdots & & \vdots \\ \vec{u}_1 & \vec{u}_2 & \cdots & \vec{u}_r \\ \vdots & \vdots & & \vdots \end{bmatrix}}_{U_{m\times r}} \underbrace{\begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_r \end{bmatrix}}_{\Sigma_{r\times r}}$$

So,

$$A_{m\times n}V_{n\times r}=U_{m\times r}\Sigma_{r\times r}$$

This equation is what we call Reduced SVD.
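A minimal sketch of the Reduced SVD for a rank-deficient matrix follows. The $3\times 2$ rank-1 matrix is hypothetical. Note one assumption about the library: `np.linalg.svd(..., full_matrices=False)` keeps $\min(m,n)$ singular values rather than $r$, so the sketch truncates to the numerical rank itself, using an assumed tolerance.

```python
import numpy as np

# Hypothetical 3x2 matrix of rank 1 (second column = 2 * first column).
A = np.array([[1.0, 2.0],
              [2.0, 4.0],
              [3.0, 6.0]])

U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Keep only the r singular values that are numerically nonzero.
r = int(np.sum(s > 1e-10))
U_r = U[:, :r]                 # m x r
Sigma_r = np.diag(s[:r])       # r x r
V_r = Vt[:r, :].T              # n x r

print("rank:", r)
print("A V_r == U_r Sigma_r:", np.allclose(A @ V_r, U_r @ Sigma_r))
print("A == U_r Sigma_r V_r^T:", np.allclose(A, U_r @ Sigma_r @ V_r.T))
```

Even though $V_{n\times r}$ is not square, $A=U_r\Sigma_rV_r^T$ still holds, because the discarded columns of $V$ lie in the Null Space of $A$.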

We have seen that we can write any $m\times n$ matrix $A$ as $AV=U\Sigma$, where $V$ and $U$ are orthonormal matrices. Because $V$ is square with orthonormal columns, we can solve for $A$: from $AV=U\Sigma$ we get $A=U\Sigma V^{-1}$, and $V^{-1}=V^T$. Taking $V$ from the Full SVD method, we get

$$A_{m\times n}=U_{m\times m}\Sigma_{m\times n}V_{n\times n}^{T}$$

Everything looks good, but how do we find these orthonormal matrices $V$ and $U$?

IDEA: Get $V$ from $A^TA$ and $U$ from $AA^T$.

Row Space of $A$ = Row Space of $A^TA$

It is easy to find a basis for the Row Space of $A^TA$, so instead of working with $A$ directly we work with $A^TA$.

Explanation:

$$A_{m\times n}=\begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}$$

$A^TA$ is

$$A^TA=A^T\begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{bmatrix}$$

Here we can see that every row of $A^TA$ is a linear combination of the row vectors of $A$, with coefficients taken from $A^T$. So the Row Space of $A^TA$ is contained in the Row Space of $A$.

And if $\text{Rank}(A)=r$ $\Rightarrow \text{Rank}(A^T)=r$, so $A$ and $A^T$ both have $r$ independent rows and columns.

So when we combine the row vectors of $A$ using $A^T$ we still get $r$ independent rows, that is, $\text{Rank}(A^TA)=r$. (If $A^TA\vec{x}=\vec{0}$ then $\vec{x}^TA^TA\vec{x}=\|A\vec{x}\|^2=0$, so $A\vec{x}=\vec{0}$; $A^TA$ and $A$ have the same Null Space and therefore the same rank.)

Now we can say that Row Space of $A$ = Row Space of $A^TA$.

$(A^TA)^T=A^TA \Rightarrow A^TA$ is symmetric, so

Row Space of $A^TA=$ Column Space of $A^TA=$ Row Space of $A$
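The claims about $A^TA$ (same rank as $A$, symmetric, same Row Space as $A$) can be sanity-checked numerically. The sketch below uses a hypothetical $3\times 4$ matrix of rank 2 and NumPy's `np.linalg.matrix_rank`; the stacking trick for comparing Row Spaces is one possible check, not the only one.

```python
import numpy as np

# Hypothetical 3x4 matrix of rank 2 (third row = first row + second row).
A = np.array([[1.0, 0.0, 1.0, 0.0],
              [0.0, 1.0, 0.0, 1.0],
              [1.0, 1.0, 1.0, 1.0]])

G = A.T @ A                                  # the n x n matrix A^T A

rank_A = np.linalg.matrix_rank(A)
rank_G = np.linalg.matrix_rank(G)
symmetric = np.allclose(G, G.T)

# If the rows of G lie in the row space of A (and the ranks agree), stacking
# the rows of A and G together cannot increase the rank.
same_row_space = np.linalg.matrix_rank(np.vstack([A, G])) == rank_A

print(rank_A, rank_G, symmetric, same_row_space)
```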

Column Space of $A$ = Column Space of $AA^T$

It is easy to find a basis for the Column Space of $AA^T$, so instead of working with $A$ directly we work with $AA^T$.

Explanation:

$$(A^T)_{n\times m}=\begin{bmatrix} a_{11} & a_{21} & \cdots & a_{m1} \\ a_{12} & a_{22} & \cdots & a_{m2} \\ \vdots & \vdots & \ddots & \vdots \\ a_{1n} & a_{2n} & \cdots & a_{mn} \end{bmatrix}$$

$AA^T$ is

$$AA^T=A\begin{bmatrix} a_{11} & a_{21} & \cdots & a_{m1} \\ a_{12} & a_{22} & \cdots & a_{m2} \\ \vdots & \vdots & \ddots & \vdots \\ a_{1n} & a_{2n} & \cdots & a_{mn} \end{bmatrix}$$

Here we can see that we are mapping the column vectors of $A^T$ using $A$, so every column of $AA^T$ is a linear combination of the column vectors of $A$. So the Column Space of $AA^T$ is contained in the Column Space of $A$.

And if $\text{Rank}(A)=r$ $\Rightarrow \text{Rank}(A^T)=r$, so $A$ and $A^T$ both have $r$ independent rows and columns.

So when we map the column vectors of $A^T$ using $A$ we still get $r$ independent columns, that is, $\text{Rank}(AA^T)=r$. (If $AA^T\vec{y}=\vec{0}$ then $\vec{y}^TAA^T\vec{y}=\|A^T\vec{y}\|^2=0$, so $A^T\vec{y}=\vec{0}$; $AA^T$ and $A^T$ have the same Null Space and therefore the same rank.)

Now we can say that Column Space of $A$ = Column Space of $AA^T$.

$(AA^T)^T=AA^T \Rightarrow AA^T$ is symmetric, so

Column Space of $AA^T=$ Row Space of $AA^T=$ Column Space of $A$

We discussed that the Row Space of $A$ is the same as the Row Space of $A^TA$, so let's compute $A^TA$.

$$A_{m\times n}=U_{m\times m}\Sigma_{m\times n}V_{n\times n}^{T}$$

$(\Sigma^T_{m\times n}=\Sigma_{n\times m})$

$$\Rightarrow (A^T)_{n\times m} = V_{n\times n}\Sigma_{n\times m}U_{m\times m}^{T}$$

$$\Rightarrow (A^T)_{n\times m}A_{m\times n} = V_{n\times n}\Sigma_{n\times m} \underbrace{U_{m\times m}^{T} U_{m\times m}}_{=\mathcal{I}_m} \Sigma_{m\times n}V_{n\times n}^{T}$$

$$\Sigma_{n\times m}\Sigma_{m\times n}= \underbrace{\begin{bmatrix} \begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_m \end{bmatrix}_{m\times m} \\ \mathbf{0}_{(n-m)\times m} \end{bmatrix}}_{\Sigma_{n\times m}} \underbrace{\begin{bmatrix} \begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_m \end{bmatrix}_{m\times m} & \mathbf{0}_{m\times (n-m)} \end{bmatrix}}_{\Sigma_{m\times n}}$$

$$\Sigma_{n\times m}\Sigma_{m\times n}= \begin{bmatrix} \begin{bmatrix} \sigma_1^2 & & & \\ & \sigma_2^2 & & \\ & & \ddots & \\ & & & \sigma_m^2 \end{bmatrix}_{m\times m} & \mathbf{0}_{m\times (n-m)} \\ \mathbf{0}_{(n-m)\times m} & \mathbf{0}_{(n-m)\times (n-m)} \end{bmatrix}_{n\times n} = \Sigma^2_{n\times n}$$

$$\Rightarrow (A^T)_{n\times m}A_{m\times n} = V_{n\times n}\Sigma^2_{n\times n}V_{n\times n}^{T}$$

The $3$ $\mathbf{0}$ blocks $\mathbf{0}_{m\times (n-m)}$, $\mathbf{0}_{(n-m)\times m}$ and $\mathbf{0}_{(n-m)\times (n-m)}$ appear because $\text{Rank}(A)=m$, so $n-m$ eigenvalues of $A^TA$ are $\mathbf{0}$.

Columns of $V$ are eigenvectors of matrix $A^TA$, and $\sigma_i^2$ is the $i^{th}$ eigenvalue of matrix $A^TA$.
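To illustrate that the eigenvalues of $A^TA$ are the squared singular values and its eigenvectors are the columns of $V$, here is a minimal sketch with a hypothetical $2\times 3$ matrix. It assumes `np.linalg.eigh`, which is intended for symmetric matrices and returns eigenvalues in ascending order, so the sketch reorders them to match the usual descending order of singular values.

```python
import numpy as np

# Hypothetical 2x3 matrix with Rank(A) = m = 2.
A = np.array([[3.0, 2.0, 2.0],
              [2.0, 3.0, -2.0]])

G = A.T @ A                                  # symmetric n x n matrix

# eigh returns ascending eigenvalues; reorder to descending.
evals, evecs = np.linalg.eigh(G)
order = np.argsort(evals)[::-1]
evals = evals[order]
V = evecs[:, order]

# Singular values are the square roots of the eigenvalues
# (clip guards against tiny negative round-off values).
sigma = np.sqrt(np.clip(evals, 0.0, None))

s = np.linalg.svd(A, compute_uv=False)       # reference singular values
print("eigenvalues of A^T A:", evals)
print("sqrt matches svd:", np.allclose(sigma[:len(s)], s))
print("A^T A V == V diag(evals):", np.allclose(G @ V, V @ np.diag(evals)))
```

The eigenvectors are only determined up to sign, so the columns of `V` may differ in sign from those returned by `np.linalg.svd`.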

We discussed that the Column Space of $A$ is the same as the Column Space of $AA^T$, so let's compute $AA^T$.

$$A_{m\times n}=U_{m\times m}\Sigma_{m\times n}V_{n\times n}^{T}$$

$(\Sigma^T_{m\times n}=\Sigma_{n\times m})$

$$\Rightarrow (A^T)_{n\times m} = V_{n\times n}\Sigma_{n\times m}U_{m\times m}^{T}$$

$$\Rightarrow A_{m\times n}(A^T)_{n\times m} = U_{m\times m}\Sigma_{m\times n} \underbrace{V_{n\times n}^{T} V_{n\times n}}_{=\mathcal{I}_n} \Sigma_{n\times m}U_{m\times m}^{T}$$

$$\Sigma_{m\times n}\Sigma_{n\times m}= \underbrace{\begin{bmatrix} \begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_m \end{bmatrix}_{m\times m} & \mathbf{0}_{m\times (n-m)} \end{bmatrix}}_{\Sigma_{m\times n}} \underbrace{\begin{bmatrix} \begin{bmatrix} \sigma_1 & & & \\ & \sigma_2 & & \\ & & \ddots & \\ & & & \sigma_m \end{bmatrix}_{m\times m} \\ \mathbf{0}_{(n-m)\times m} \end{bmatrix}}_{\Sigma_{n\times m}}$$

$$\Sigma_{m\times n}\Sigma_{n\times m}= \begin{bmatrix} \sigma_1^2 & & & \\ & \sigma_2^2 & & \\ & & \ddots & \\ & & & \sigma_m^2 \end{bmatrix}_{m\times m} = \Sigma^2_{m\times m}$$

$$\Rightarrow A_{m\times n}(A^T)_{n\times m} = U_{m\times m}\Sigma^2_{m\times m}U_{m\times m}^{T}$$

Here there are no $\mathbf{0}$ blocks because $\text{Rank}(A)=m$, so all $m$ eigenvalues of $AA^T$ are nonzero.

Columns of $U$ are eigenvectors of matrix $AA^T$, and $\sigma_i^2$ is the $i^{th}$ eigenvalue of matrix $AA^T$.
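Putting the pieces together, one way to sketch the whole construction is: take $V$ from the eigenvectors of $A^TA$, set $\sigma_i$ to the square roots of the nonzero eigenvalues, and form $\vec{u}_i=A\vec{v}_i/\sigma_i$. The example below is an illustration under stated assumptions, not a production algorithm: the matrix is hypothetical and has full row rank, so the $r=m$ vectors $\vec{u}_i$ already fill $U$. For $r<m$ the remaining columns of $U$ would have to be completed with a basis of the Left Null Space.

```python
import numpy as np

# Hypothetical 2x3 matrix with Rank(A) = m = 2.
A = np.array([[3.0, 2.0, 2.0],
              [2.0, 3.0, -2.0]])
m, n = A.shape

# Step 1: V and sigma^2 from the symmetric matrix A^T A (descending order).
evals, V = np.linalg.eigh(A.T @ A)
order = np.argsort(evals)[::-1]
evals, V = evals[order], V[:, order]

# Step 2: numerical rank and the nonzero singular values.
r = int(np.sum(evals > 1e-10))
sigma = np.sqrt(evals[:r])

# Step 3: u_i = A v_i / sigma_i (broadcasting divides column i by sigma[i]).
U = (A @ V[:, :r]) / sigma

# Step 4: assemble Sigma (m x n) and rebuild A = U Sigma V^T.
Sigma = np.zeros((m, n))
Sigma[:r, :r] = np.diag(sigma)
A_rebuilt = U @ Sigma @ V.T

print("U has orthonormal columns:", np.allclose(U.T @ U, np.eye(r)))
print("A A^T U == U diag(sigma^2):",
      np.allclose(A @ A.T @ U, U @ np.diag(sigma**2)))
print("A == U Sigma V^T:", np.allclose(A, A_rebuilt))
```

Computing $U$ from $A\vec{v}_i/\sigma_i$, instead of from a separate eigen-decomposition of $AA^T$, keeps the signs of $\vec{u}_i$ and $\vec{v}_i$ consistent with each other.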